About me

Welcome to my website! I’m a postdoc working with Linda Drijvers in the Communicative Brain group at the Max Planck Institute for Psycholinguistics. In my research, I use computational models of visual processing (artificial neural networks, models of reading), neuroimaging and behavioral experiments. Apart from doing research, I enjoy teaching and organizing events.

Recent projects

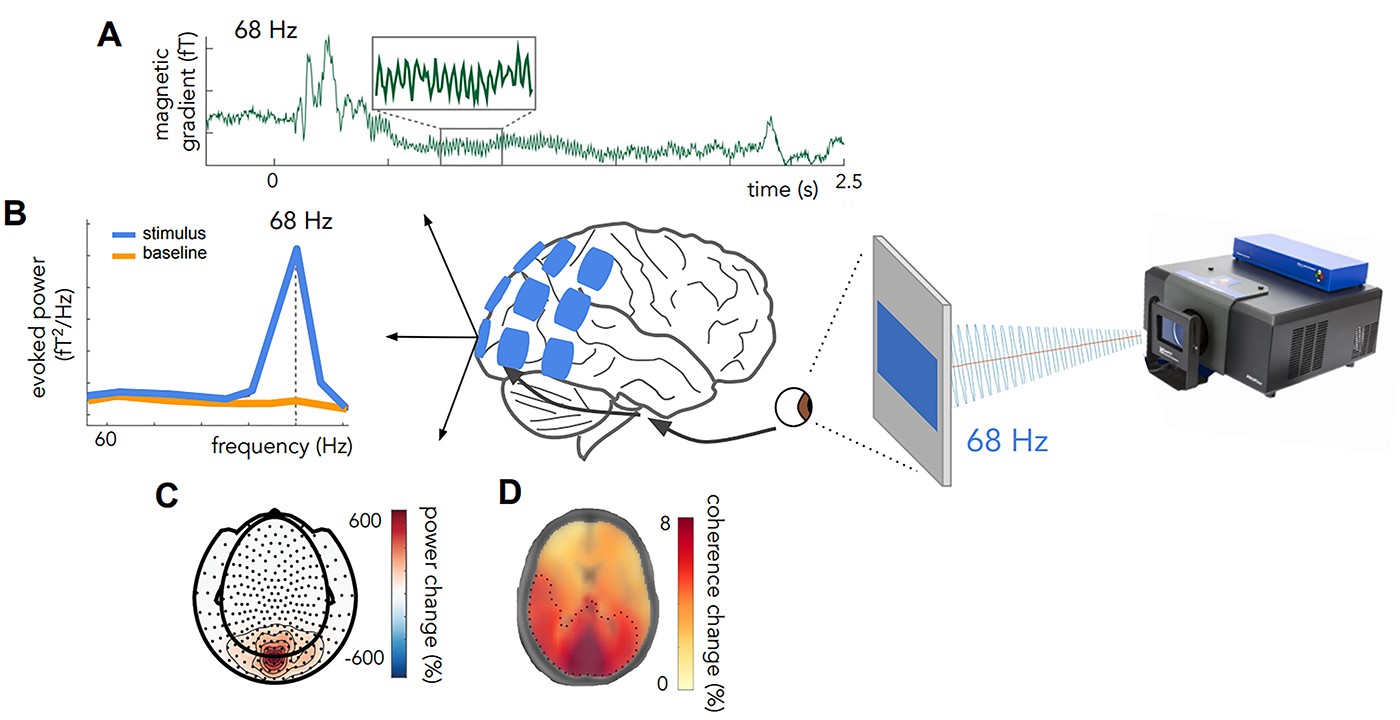

- Rapid invisible frequency tagging (RIFT): a promising technique to study neural and cognitive processing using naturalistic paradigms (published)

Frequency tagging has been successfully used to investigate selective stimulus processing in electroencephalography (EEG) or magnetoencephalography (MEG) studies. Recently, new projectors have been developed that allow for frequency tagging at higher frequencies (>60 Hz). This technique, rapid invisible frequency tagging (RIFT), provides two crucial advantages over low-frequency tagging: (i) it leaves low-frequency oscillations unperturbed, and thus open to investigation, and (ii) it can render the tagging invisible, resulting in more naturalistic paradigms in which participants are unaware of the tagging. In this feature article, we provide a brief overview of RIFT applications that could advance our current understanding of oscillations involved in cognitive processes. To showcase the use of RIFT in a broad variety of domains, we highlight recent findings using RIFT and provide an overview of its future promises and challenges.

RIFT generates steady-state visual evoked potentials (SSVEPs) in the brain at the tagging frequencies. A) Event-related fields show responses at the tagged frequency (68 Hz). B) Power at visual sensors peaks at the tagging frequency of the visual stimulus. C) The power increase at the tagged frequency is strongest over occipital regions. D) Percentage change in 68 Hz coherence between a stimulus window and a post-stimulus baseline; the dotted line outlines the region where this change exceeds 5%. Panels A, B, and C adapted from Drijvers et al. (2021).
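The principle behind panel B, a power peak at the tagged frequency, can be illustrated with a small simulation. This is a hypothetical sketch, not the analysis from Drijvers et al. (2021): it assumes a 1200 Hz sampling rate, a weak 68 Hz sinusoid buried in Gaussian noise, and a hand-rolled single-bin discrete Fourier transform (the helper names `power_at` and `simulate_tagged_trace` are illustrative) in place of the spectral estimation a real MEG pipeline would use. Even when the tagging signal is hard to see in the raw trace, its power stands out at the tagged frequency.

```python
import math
import random


def power_at(signal, freq, fs):
    """Power of `signal` at `freq` Hz, computed with a single-bin discrete Fourier transform."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * k / fs) for k, x in enumerate(signal))
    im = -sum(x * math.sin(2 * math.pi * freq * k / fs) for k, x in enumerate(signal))
    return (re * re + im * im) / n


def simulate_tagged_trace(tag_freq, fs, duration, amplitude=0.5, noise_sd=1.0, seed=0):
    """Hypothetical sensor trace: a weak sinusoid at the tagging frequency plus Gaussian noise."""
    rng = random.Random(seed)
    n = int(round(fs * duration))
    return [
        amplitude * math.sin(2 * math.pi * tag_freq * k / fs) + rng.gauss(0.0, noise_sd)
        for k in range(n)
    ]


FS = 1200.0  # assumed sampling rate (Hz), not taken from the study
trace = simulate_tagged_trace(tag_freq=68.0, fs=FS, duration=1.0)

# Compare power at the tagged frequency with neighboring frequencies.
candidates = [60.0, 64.0, 68.0, 72.0, 76.0]
spectrum = {f: power_at(trace, f, FS) for f in candidates}
peak = max(spectrum, key=spectrum.get)
print(peak)  # 68.0
```

Because a 1 s window holds a whole number of cycles of each candidate frequency, every candidate falls on an exact DFT bin, which keeps leakage between them out of the comparison.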

- Using RIFT to study the role of lower-frequency oscillations in sensory processing and audiovisual integration

During communication in real-life settings, our brain needs to integrate auditory information (such as speech) with visual information (visible speech, co-speech gestures) to form a unified percept. In addition, to understand another person efficiently, we need to select the relevant sources of information while preventing interference from irrelevant events (other people talking, meaningless movements). In the current study, we use rapid invisible frequency tagging (RIFT) and magnetoencephalography (MEG) to investigate whether the integration and interaction of audiovisual information might be supported by low-frequency phase synchronization between regions. We presented participants with videos of an actress uttering action verbs (auditory; tagged at 58 Hz) accompanied by visual gestures. To manipulate spatial attention, we included an attentional cue and presented the visual information left and right of fixation at different tagging frequencies (visual; attended stimulus tagged at 65 Hz; unattended stimulus tagged at 63 Hz). Integration difficulty was manipulated through lower-order auditory factors (clear/degraded speech) and higher-order visual factors (congruent/incongruent gestures). This approach enables us to investigate oscillatory responses to multiple competing stimuli and, in turn, lays the groundwork for future studies using more natural communication paradigms.
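Because the three stimuli are tagged at nearby frequencies (58, 63, and 65 Hz), their responses can only be separated if the spectral analysis resolves the closest pair. A common rule of thumb is that a window of length T gives a frequency resolution of 1/T Hz, so the 2 Hz gap between 63 and 65 Hz calls for at least 0.5 s of data. The sketch below is a hypothetical illustration, not the study's pipeline: the sampling rate, the noise-free signal, the amplitudes, and the helper names `min_window_seconds`, `power_at`, and `mix` are all assumptions. It uses a 1 s window so that all three integer tag frequencies fall exactly on DFT bins.

```python
import math

# Tagging frequencies (Hz) as described for this study.
TAGS = {"auditory": 58.0, "visual_attended": 65.0, "visual_unattended": 63.0}


def min_window_seconds(freqs):
    """Shortest window T whose resolution (1/T Hz) matches the closest tag spacing."""
    ordered = sorted(freqs)
    min_gap = min(b - a for a, b in zip(ordered, ordered[1:]))
    return 1.0 / min_gap


def power_at(signal, freq, fs):
    """Power at `freq` Hz from a single-bin discrete Fourier transform."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * k / fs) for k, x in enumerate(signal))
    im = -sum(x * math.sin(2 * math.pi * freq * k / fs) for k, x in enumerate(signal))
    return (re * re + im * im) / n


def mix(amplitudes, fs, duration):
    """Noise-free sum of sinusoids, one per tagging frequency (hypothetical amplitudes)."""
    n = int(round(fs * duration))
    return [
        sum(a * math.sin(2 * math.pi * f * k / fs) for f, a in amplitudes.items())
        for k in range(n)
    ]


FS = 1000.0  # assumed sampling rate (Hz)
window = min_window_seconds(TAGS.values())  # 2 Hz spacing (63 vs 65 Hz) -> 0.5 s

# Arbitrary, distinct amplitudes (not results) so each tag's contribution is identifiable.
amps = {TAGS["auditory"]: 0.8, TAGS["visual_attended"]: 1.0, TAGS["visual_unattended"]: 0.5}
trace = mix(amps, FS, duration=1.0)
recovered = {f: power_at(trace, f, FS) for f in amps}
print(window, {f: round(p, 3) for f, p in recovered.items()})
# 0.5 {58.0: 160.0, 65.0: 250.0, 63.0: 62.5}
```

Each recovered power equals A²·N/4 for its own amplitude A and N = 1000 samples, with no cross-talk from the neighboring tags, because integer frequencies are orthogonal over a 1 s window.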